Traditional music research

For decades, we, music radio programmers, have tested our music libraries through Auditorium Music Tests (AMTs) and call-outs. Every decision was based on a small panel of listeners telling us, through a dial or a questionnaire, how they would react if the songs presented to them as 8-second hooks were played on air, so we could estimate the impact on our total audience.

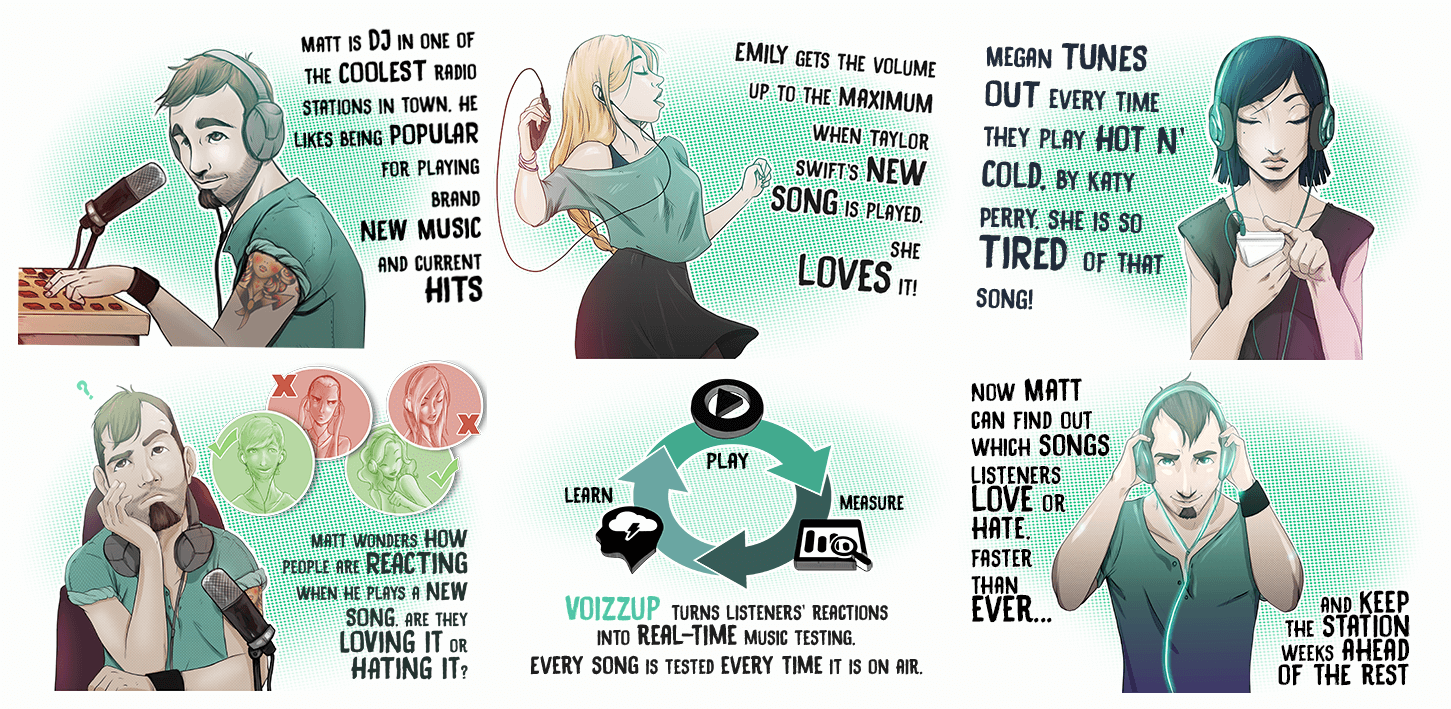

These were estimations based on the perceptions of a tiny portion of the audience. But it made sense: it was all we had. Let’s examine some situations that will probably be very familiar to those of you who work in music radio programming:

What’s that smell of smoke? Oh, it’s just another burnt song!

- For months we’ve been playing this song, a super-tester. AMT after AMT, the song has always been at the top, showing very healthy levels of passion. (Of 120 people in the auditorium, 86 said they liked the song and 45 said it was one of their favourites.)

- After a year and a half of playing the song in relatively high rotation (we and the rest of the stations in our market), burn suddenly increases. (23 people say they are tired of the song and would stop listening if it were played. However, 68 still say they like it.)

- Is a 19% burn acceptable? Many listeners still like the song… Which indicator should prevail? Should we stop scheduling it, or would reducing its rotation be enough? What do we do?
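The percentages in this scenario are simple shares of the 120-person auditorium panel. A minimal Python sketch that makes them explicit; the `share` function and the dictionary layout are illustrative names, not part of any research tool:

```python
def share(count: int, panel: int) -> float:
    """Percentage of the panel giving a response, rounded to one decimal."""
    return round(100 * count / panel, 1)

PANEL = 120  # respondents in the auditorium

# Early AMTs: the song is a super-tester.
first_amt = {"like": 86, "favourite": 45}
# A year and a half later: burn appears.
later_amt = {"like": 68, "burn": 23}

for label, results in (("first", first_amt), ("later", later_amt)):
    for response, count in results.items():
        print(f"{label} {response}: {share(count, PANEL)}%")
```

Run as is, it shows the positive score falling from 71.7% to 56.7% while burn sits at 19.2%: a majority still likes the song, yet almost one listener in five is tired of it, which is exactly the tension behind the questions above.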

Men Are from Mars, Women Are from Venus

- New artist, new song. It was hot in the US, so we decided to give it a chance. After 120 spins we take it to call-out for the first time, and the results show the song doesn’t perform very well among women. (Of the 80 people interviewed, 48 said they don’t like the song. Of those 48, 27 are women.)

- If 67% of our female listeners don’t like the song, should we just forget about it? Is it not appropriate for our format? Should we keep playing it and give it another try in two weeks? What do we do?
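The 67% figure depends on how many women were in the call-out sample, and the results above don’t state that number. Since 27 out of 40 is 67.5%, the sketch below assumes an even split of 40 women and 40 men. That split is our assumption, not a reported figure:

```python
RESPONDENTS = 80
DISLIKE_TOTAL = 48
DISLIKE_WOMEN = 27

# ASSUMPTION: the sample is split evenly by gender (not stated in the results).
WOMEN = 40
MEN = RESPONDENTS - WOMEN

dislike_men = DISLIKE_TOTAL - DISLIKE_WOMEN  # the remaining 21 who dislike it

def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal."""
    return round(100 * part / whole, 1)

print(f"Overall dislike: {pct(DISLIKE_TOTAL, RESPONDENTS)}%")  # 60.0%
print(f"Dislike among women: {pct(DISLIKE_WOMEN, WOMEN)}%")    # 67.5%
print(f"Dislike among men: {pct(dislike_men, MEN)}%")          # 52.5%
```

Under the same assumption, 52.5% of men dislike the song as well.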

Nothing. Nada.

- A similar situation. We receive the first results for a new track to which we’ve given 105 spins in the last two weeks. The only relevant finding is that unfamiliarity is pretty high. (Of 80 call-out respondents, 56 say they don’t know the song, 12 like it and another 12 don’t.)

- Should we increase its rotation to make it more recognisable? Or, on the contrary, do we lower its turnover, giving it a less aggressive and more sustainable rotation? Should we leave it as it is and hope it gains familiarity as more stations play it? Or do we just stop programming it and let other stations make it grow? What-do-we-do?
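Splitting the 80 call-out responses into familiar and unfamiliar respondents shows how little these first results say. A short sketch using only the counts reported above:

```python
respondents = 80
unfamiliar = 56
like = 12
dislike = 12

familiar = respondents - unfamiliar  # respondents who recognised the hook
assert like + dislike == familiar, "every familiar respondent gave an opinion"

unfamiliarity = 100 * unfamiliar / respondents
like_among_familiar = 100 * like / familiar

print(f"Unfamiliarity: {unfamiliarity:.0f}%")              # 70%
print(f"Like among familiar: {like_among_familiar:.0f}%")  # 50%
```

Only 24 respondents know the song, and they split exactly in half, which leaves very little to act on.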

Data-driven music test

In previous articles I’ve extensively expressed my belief that data analysis enables a leap forward in radio programming and research.

Probably the most tangible and concrete application of data analysis in radio would be a data-driven music test.

It addresses three issues existing in traditional music research:

- It doesn’t require a laboratory environment: it captures reactions from the audience during natural listening.

- It’s not based on perceptions or responses obtained through a test dial or a questionnaire. Radio stations’ existing mobile apps can easily turn listeners’ smartphones into devices that capture their actual reactions.

- An AMT usually consists of sessions with 60 respondents, perhaps a few more in a call-out. By contrast, listening data can be captured from significant proportions of the audience, especially in some formats such as CHR. We are talking thousands.
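One way to make the jump from 60 respondents to thousands concrete is the standard normal-approximation margin of error for a proportion, roughly 1.96 × sqrt(p(1 − p) / n) at 95% confidence. The sketch assumes simple random sampling and the worst case p = 0.5. Neither auditorium panels nor app users are truly random samples, so treat the numbers as illustrative. The sample sizes of 1,000 and 5,000 are hypothetical:

```python
import math

def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a ~95% normal-approximation confidence interval, in percentage points."""
    return 100 * z * math.sqrt(p * (1 - p) / n)

for n in (60, 1_000, 5_000):
    print(f"n={n:>5}: ±{margin_of_error(n):.1f} points")
```

With 60 respondents, a result can be off by about ±12.7 points. With 1,000 listeners, that shrinks to about ±3.1.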

Continuous data capture, real-time testing

In that scenario, with no lab environment, no need to recruit a sample, and the capacity to capture spontaneous reactions from thousands of listeners during natural listening, a continuous, real-time music test becomes a reality.

As I often insist, the innovation is not the technology; that is just an enabler. The real transformation is in the evaluation cycle: every song is tested in full, every time it is played.
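Here is a minimal sketch of what testing a song every time it is played could look like in code. It assumes that a tune-out is any app listening session that ends while the song is on air. The `Session` class, the `tune_out_rate` function and the session data are hypothetical, and this is not Voizzup’s implementation:

```python
from dataclasses import dataclass

@dataclass
class Session:
    """One listener's stream session, in seconds on a shared clock (hypothetical schema)."""
    start: float
    end: float

def tune_out_rate(sessions: list[Session], song_start: float, song_end: float) -> float:
    """Share of listeners present when the song began who left before it finished.

    ASSUMPTION: a tune-out is any session that ends while the song is playing.
    """
    present = [s for s in sessions if s.start <= song_start < s.end]
    if not present:
        return 0.0
    left = sum(1 for s in present if s.end < song_end)
    return left / len(present)

# Hypothetical spin: the song airs from t=600 to t=810 (3.5 minutes).
sessions = [
    Session(0, 2000),    # stays through the song
    Session(300, 700),   # leaves mid-song: tune-out
    Session(650, 900),   # joins after the song started: not counted
    Session(100, 605),   # leaves in the first seconds: tune-out
    Session(550, 1500),  # stays through the song
]
print(f"Tune-out rate: {tune_out_rate(sessions, 600, 810):.0%}")  # 50%
```

Counting only listeners who were present at the start of the song keeps late joiners from diluting the rate.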

This continuity in music testing makes it possible to assess risk at all times. That helps us feel safer but, more importantly, invites us to be bolder.

When describing traditional music research methodologies, I presented three scenarios. It’s a good exercise to go back to them and think about how we would approach each one with data-driven music research. You’ll see that just one new condition changes everything: we are measuring actual tune-outs every time the song is played, so we can see a trend spin after spin.
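Once each spin has its own tune-out rate, the trend spin after spin can be estimated with an ordinary least-squares slope over the spin sequence. The `trend` function and the eight rates below are hypothetical illustrations, not real station data:

```python
def trend(rates: list[float]) -> float:
    """Ordinary least-squares slope of tune-out rate against spin number (change per spin)."""
    n = len(rates)
    if n < 2:
        raise ValueError("need at least two spins to estimate a trend")
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(rates) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, rates))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var

# Hypothetical tune-out rates for one song over eight consecutive spins.
rates = [0.040, 0.041, 0.039, 0.042, 0.045, 0.047, 0.050, 0.053]
slope = trend(rates)
print(f"Tune-out changes by {slope * 100:+.2f} points per spin")  # +0.19
```

A sustained positive slope would flag rising burn for a song well before the next AMT or call-out. Deciding how steep a slope justifies cutting rotation remains a programming call.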

My name is Tommy Ferraz. I’ve been a music programmer, programme director and group programme director in radio for almost two decades. I’m also the founder of Voizzup, and this is our Continuous Music Test: www.voizzup.com/musictest